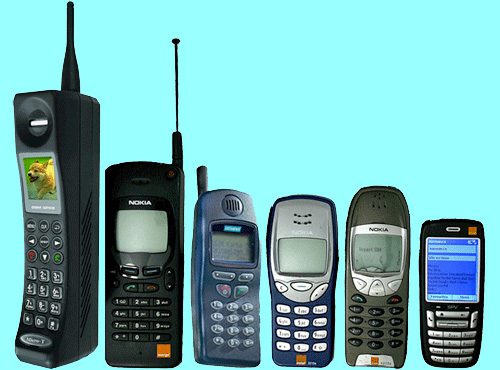

Remember that time when Moore’s law completely disrupted the computing industry? Decade after decade, as computer chips kept getting smaller, virtually every electronics company sprang into action to create the next multipurpose smart device. There was a time when phones looked like this:

You could call, text, and maybe play Snake, and the phone had about 6 megabytes of memory – which was cutting edge at the time. Then, every two years, phones got faster and smaller. Storage capacities rose from 8 GB to 16 GB to 32 GB and so on. The incremental progress we have been witnessing over the years hinges on one key trend – Moore’s Law.

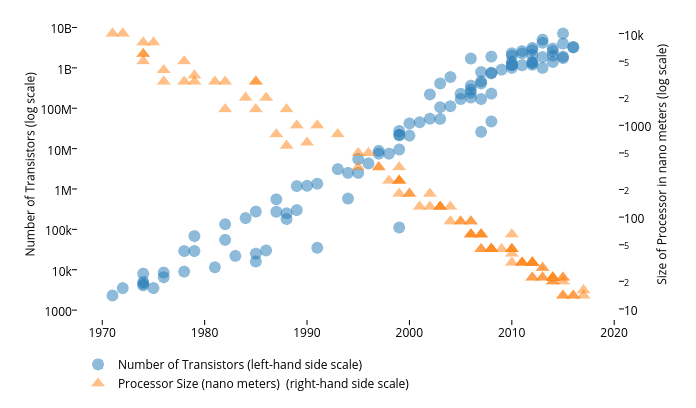

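For the unfamiliar, Moore’s Law states that the number of transistors in an integrated circuit doubles at a regular interval. Gordon Moore, who later co-founded Intel, made the prediction in 1965 after observing the trend at that time; he originally expected a doubling every year, and in 1975 revised the pace to roughly every two years.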

Once this was set in motion, chip power went up and costs halved over the years. This exponential growth brought an enormous number of advances in computing. Cheaper and smaller electronics flooded the consumer markets year after year.
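To get a feel for how quickly repeated doubling compounds, here is a minimal Python sketch. The function name `projected_transistors` and the starting count of 1,000 transistors are hypothetical, chosen only for illustration, and the doubling period is left as a parameter because both a one-year and a two-year pace are quoted for Moore’s Law.

```python
def projected_transistors(start_count, years, doubling_period_years=2.0):
    """Project a transistor count forward, assuming a fixed doubling period."""
    return start_count * 2 ** (years / doubling_period_years)


# Hypothetical starting point: a chip with 1,000 transistors.
start = 1_000
for years in (2, 10, 20):
    # With a two-year doubling period the count doubles 1, 5, and 10 times.
    print(years, round(projected_transistors(start, years)))
# prints:
# 2 2000
# 10 32000
# 20 1024000
```

Twenty years at a two-year doubling period is ten doublings, or roughly a thousandfold increase, which is why the trend reshaped the industry so quickly.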

To give you a grasp of the minuscule scales we are talking about: a single chip today consists of billions of transistors, and each transistor is about 14 nanometers across.

That’s smaller than most human viruses!

Is Moore’s Law Dying?

For Moore’s law to keep holding, chip manufacturers must be able to fit more and more transistors onto a silicon chip, which means shrinking the transistors even further.

But analysts predict that after 2021, we will have exhausted all our methods for creating finer and finer geometries on silicon wafers. In short, transistors would stop shrinking. Moore’s law comes with an expiration date, but that won’t deter manufacturers from innovating in transistor design.


Chip makers have already begun looking into new transistor designs, vertical geometries, and 3D structures.

The end of Moore’s law is ushering in a new approach to computing. To power the next wave of electronics, there are a few promising options. One of them is quantum computing, which uses the properties of matter at the quantum scale to perform computations far faster and more efficiently.

Another promising idea that has been gaining popularity is biologically inspired chips, which operate by mimicking the way our brain functions.

In recent years, the quantum computing and AI industries have witnessed significant growth and rising popularity among researchers and tech entrepreneurs. Both are considered viable options for increasing chip power once the gains from Moore’s law run out.

Moving past Moore’s law, which primarily focused on increasing the number of transistors on a chip and reducing their size, neural chips would instead focus on increasing computing power. They will work by mimicking the human brain on several facets of computing: cognitive function (algorithm), synapses between neurons (devices), and the brain’s circuitry (architecture).


IBM has already embarked upon this mission. Its first brain-inspired neural chip – the IBM TrueNorth – has begun commercial production. Intel too has been working on brain chips for years now and recently announced plans to make new investments. Moreover, Qualcomm is still working on the Neural Processing Unit that used to be its Zeroth product.

How did we discover neural chips?

Our brain is an incredible example of efficient computing. Researchers have spent considerable time studying how it carries out extremely complex processes in minimal time.


It turns out that the brain’s neurons operate in a mix of digital and analog modes. In the digital mode, neurons send discrete signals in the form of electrical spikes, much like a computer’s operations in ones and zeros. But to process incoming signals, a neuron switches to an analog style: it adds up the incoming signals and fires only when a threshold is reached.
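The add-then-fire behaviour described above can be sketched in a few lines of Python. This is a toy model under stated assumptions, not a description of any real chip: the class name `ThresholdNeuron`, the threshold of 1.0, the input values, and the choice to reset the accumulated signal to zero after a spike are all hypothetical.

```python
class ThresholdNeuron:
    """Toy integrate-and-fire unit: sums inputs and spikes at a threshold."""

    def __init__(self, threshold=1.0):
        self.threshold = threshold
        self.potential = 0.0  # accumulated (analog) input

    def receive(self, signal):
        """Add an incoming signal; return 1 if the neuron fires, else 0."""
        self.potential += signal
        if self.potential >= self.threshold:
            self.potential = 0.0  # assumed behaviour: reset after a spike
            return 1
        return 0


neuron = ThresholdNeuron(threshold=1.0)
spikes = [neuron.receive(s) for s in [0.25, 0.25, 0.5, 0.25, 1.0]]
print(spikes)  # [0, 0, 1, 0, 1]
```

The inputs are continuous values that accumulate (the analog part), while the output is a discrete 0 or 1 (the digital part).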

Observing this, researchers tried using transistors in a mixed digital-analog mode. The result was revealing: the chips became more energy-efficient and robust. This gave rise to the vision of a more powerful, energy-efficient, and robust neuromorphic computer.

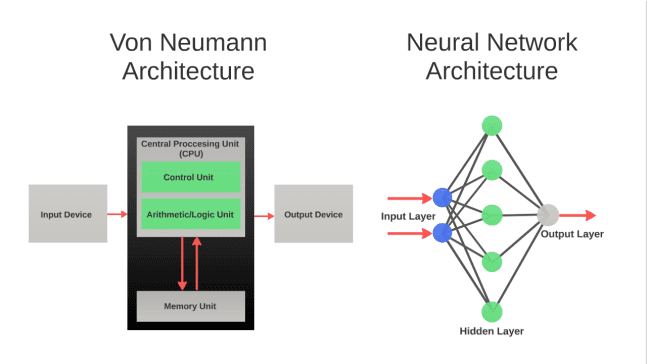

Today, standard chips are built on the von Neumann architecture, where the processor and memory are separate and data is transferred back and forth between them. A processing unit (CPU) fetches commands from memory and runs them to execute tasks. Neuromorphic chips, on the other hand, use a completely different model. Their storage and processing units are connected together within ‘neurons’, which communicate and learn together.
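The contrast between the two models can be illustrated with a rough Python sketch. Everything here is a hypothetical simplification: `run_von_neumann`, `LocalNeuron`, the tiny two-instruction program, the transfer counter, and the weight-update rule are invented for illustration and do not describe how any actual CPU or neuromorphic chip is implemented.

```python
def run_von_neumann(memory, program):
    """Run (op, src_a, src_b, dest) instructions against a separate memory.

    Returns the number of memory transfers the processor needed.
    """
    transfers = 0
    for op, src_a, src_b, dest in program:
        x, y = memory[src_a], memory[src_b]  # fetch both operands
        transfers += 2
        # Only "add" is checked; any other op is treated as multiply.
        result = x + y if op == "add" else x * y
        memory[dest] = result  # write the result back
        transfers += 1
    return transfers


class LocalNeuron:
    """Toy unit that keeps its weights (storage) next to its arithmetic."""

    def __init__(self, weights):
        self.weights = list(weights)

    def respond(self, inputs):
        # Weighted sum computed from locally stored weights.
        return sum(w * x for w, x in zip(self.weights, inputs))

    def learn(self, inputs, rate=0.5):
        # Assumed update rule: strengthen the weights on active inputs.
        self.weights = [w + rate * x for w, x in zip(self.weights, inputs)]


memory = {"a": 2, "b": 3, "c": 0}
program = [("add", "a", "b", "c"), ("mul", "c", "a", "c")]
transfers = run_von_neumann(memory, program)
print(memory["c"], transfers)  # 10 6

neuron = LocalNeuron([0.5, 0.5])
before = neuron.respond([1, 0])
neuron.learn([1, 0])
after = neuron.respond([1, 0])
print(before, after)  # 0.5 1.0
```

In the first half, every operation costs round trips between the processor and memory. In the second, each unit holds the values it needs and adjusts them in place, which is the idea behind placing storage and processing together.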

The Future

As the industry prepares itself to move away from shrinking transistors, brain-inspired chips are poised to be the future of next-generation computing. Neuromorphic chips aim to turn general-purpose calculators into machines that learn from experience and make intelligent decisions.

The real-world applications of such chips are endless. They promise high energy efficiency for wearables, IoT devices, and smartphones; real-time contextual understanding in automobiles, robotics, medical imagers, and cameras; and fast multi-modal sensor fusion at an unprecedented neural network scale. While Moore’s law governed the seemingly inexorable shrinking of transistors for fifty years, the arrival of quantum computing and neural chips holds an entirely new and unpredictable future for the next generation of technological revolution.