Hollywood sci-fi blockbusters that keep us glued to the screen, often projecting dystopian versions of humanity’s future, are usually regarded as ‘unrealistic’ science fiction: depictions that can never come true and exist for entertainment value only. Lately, though, what seemed to be mere speculation has been closing the gap with reality. Self-driving cars, a computer winning at Jeopardy, and assistants such as Siri, Google Now and Cortana form the base of an IT arms race fuelled by exceptional investment in the field of Artificial Intelligence.

While we heap praise on the AI industry for the work it is doing, we forget that there may be negative consequences to deal with as well. Physicist Stephen Hawking expressed his concern, saying, “I think the development of full artificial intelligence could spell the end of the human race.” When asked by the media about his view on AI, Elon Musk described it as “our biggest existential threat” and likened creating an artificial superintelligent entity to “summoning up the demon”. Even Bill Gates answered in the affirmative when asked if he felt AI was a threat to humanity.

What exactly is making these experts treat what is potentially the biggest scientific achievement of our species as an existential threat? If AI is our answer to eradicating war, disease, and poverty, problems that have plagued humanity for centuries, why is everyone hesitant?

Understanding the Underlying Risks

One of the greatest AI threats we could face is the superintelligence control problem. A sufficiently advanced AI would far outstrip humans in terms of capabilities and intelligence. Does that mean we would have no control over the robots running on AI? Superintelligent machines could have far-reaching impacts on the planet (they’ll probably be used directly to shape the world), and they could predict the consequences of someone hitting their off switch. Using that switch would then no longer be an easy task!

AI-controlled weapons are expected to play a major part in the war zones of the future. AI would almost certainly increase military efficiency. At the same time, it could ignite another global arms race.

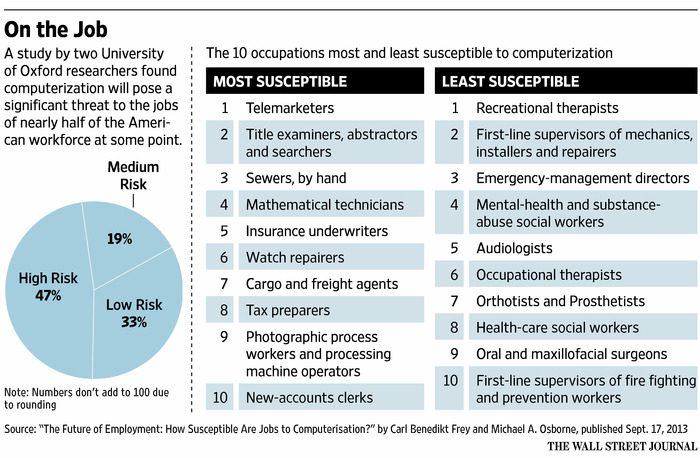

Then, of course, there’s unemployment. With AI machines replacing people in many jobs, there is already a raging debate on how best to deal with the rising degree of automation across our industries. Parts of the global workforce live in constant fear of being ‘replaced’. A study by two researchers from Oxford estimates that nearly half of the jobs in the US are at risk of being lost to artificial intelligence and automation!

The other major challenge involves looking at AI as something like currency: there will be a need to prevent superintelligent technology from becoming concentrated in the hands of a select few authorities and institutions. It is entirely plausible that the first country to achieve superintelligence will manage to maintain its supremacy, because of the unassailable lead it will have gained.

Safeguards akin to the Nuclear Industry

Dr Stuart Armstrong, of the Future of Humanity Institute at Oxford University, says: “Humans steer the future not because we’re the strongest or the fastest, but because we’re the smartest. When machines become smarter than humans, we’ll be handing them the steering wheel.”

The one thing setting AI apart is its rate of evolution. Humans, with all their intelligence and intellectual capability, exist as they do today as a result of biological evolution that took millions of years. For an intelligence residing within silicon chips, this rate of evolution will be unimaginably faster, jumping hundreds or thousands of generations within seconds.

Consider nuclear power. Sure, it gives us access to huge amounts of clean energy, and delivers many more benefits to our species than damage. But that reason alone has never been enough incentive for a relentless expansion of nuclear energy. Governments and societies all over the world still tread cautiously on nuclear power. This became especially apparent after the disasters in Japan earlier this decade.

As we’ve seen repeatedly, the world populace would rather be safe than sorry. Stringent safeguards regulate the nuclear industry. It would not be wrong to say that the dangers from AI match, if not exceed, the potential dangers from nuclear power. The obvious thing to do, then, is to safeguard ourselves against potential AI dangers in a similar way.

Many scientists dismiss the very thought of an AI threat, since they believe that politicians and policymakers, lacking complete knowledge of the field, would only shift to an alarmist attitude that would cut off any growth in the AI industry. The fear of funding cuts is what stops many researchers from voicing their concerns and the need for AI safety.

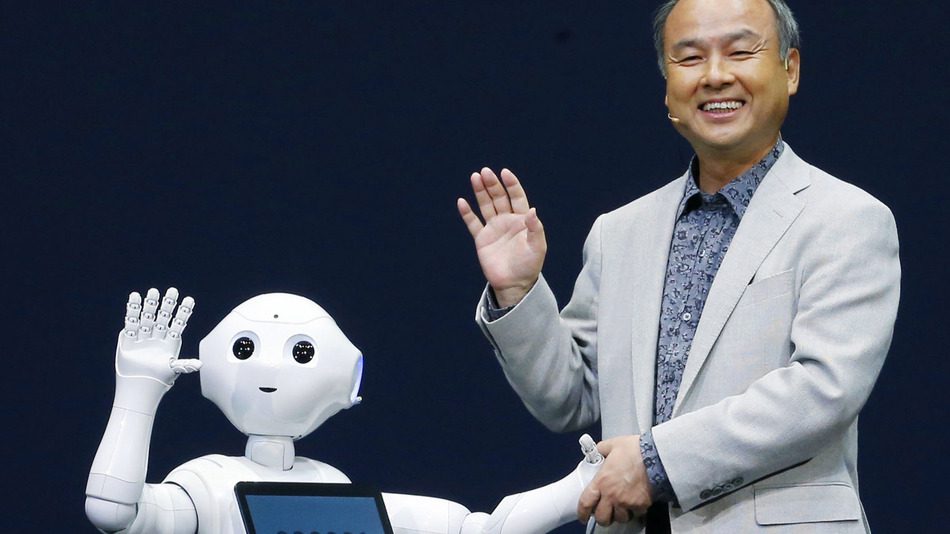

In recognition of the need for AI safety, OpenAI, a non-profit artificial intelligence research company associated with tech magnate Elon Musk, was set up in December 2015. It aims to carefully promote and develop open-source, friendly artificial intelligence to benefit, and not harm, humanity. The organization aims to make its patents and research open to the public by freely collaborating with other institutions and researchers.

There is still a need for greater AI safety measures, and it needs to be addressed, as the measures currently being taken come nowhere close to keeping pace with the speed at which AI is moving!