Survival demands evolution and adaptation. This holds true for any entity in any scenario, even for the leading tech companies that rule the industry. With buzzwords like AR, AI and VR doing the rounds, all market players need to up their game by designing products that cater to a wider audience.

Google has already begun its journey towards becoming a company that spans varied technology trends such as the Internet of Things, Virtual Reality and Artificial Intelligence. It has also invested in many other channels and paradigms of the tech world, namely making gadgets and software, designing classroom-training tools, designing a virtual assistant and creating products that solve social issues using technology. However, being a search and advertising company at its core, Google decided to unveil a product that combines the best of both worlds – Google Lens.

Presenting to you… Google Lens!

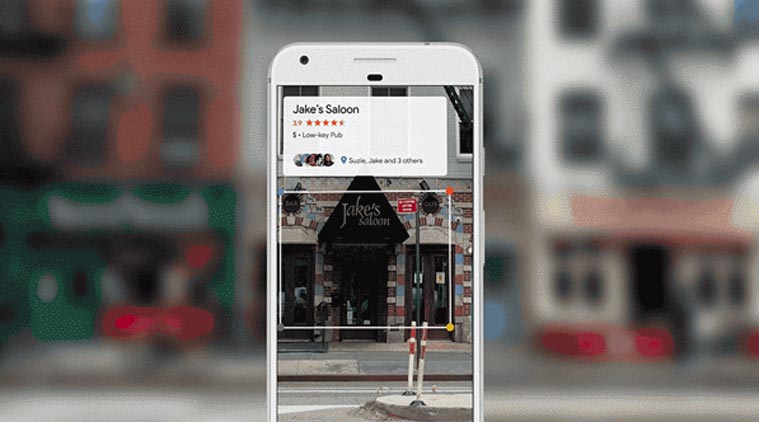

Google I/O 2017, held in May, featured many interesting announcements from CEO Sundar Pichai. One of them was Google Lens – a search engine that uses your phone’s camera. This latest step in visual search can be traced back to Google’s Image Search feature, which used basic machine learning. Google Lens, an AI-powered app for object and scene recognition, not only provides information on what the camera captures but also lets you act on it quickly.

For instance, if you point the camera at a restaurant, Lens will let you quickly call and make a booking instead of just telling you the restaurant’s name. The impressive demo at Google I/O also showed how Lens can read the Wi-Fi SSID information printed on a router’s sticker using Optical Character Recognition, and use that information to log in to the detected network automatically. Set to release later this year, Google Lens will first feature in Google Photos and Google Assistant.
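To make the router example a little more concrete, here is a minimal Python sketch of the step that comes after Optical Character Recognition: picking the network name and password out of the recognised text. It is only an illustration and not how Lens itself works. The OCR step is assumed to have already produced plain text, and the label spellings, the `parse_router_sticker` helper and the sample sticker are all hypothetical.

```python
import re
from typing import NamedTuple, Optional


class WifiCredentials(NamedTuple):
    ssid: str
    password: str


# Assumed label spellings; real router stickers vary widely.
SSID_PATTERN = re.compile(
    r"^\s*(?:SSID|Network(?:\s+Name)?)\s*[:=]\s*(.+?)\s*$",
    re.IGNORECASE | re.MULTILINE,
)
PASSWORD_PATTERN = re.compile(
    r"^\s*(?:Password|Passphrase|Key)\s*[:=]\s*(.+?)\s*$",
    re.IGNORECASE | re.MULTILINE,
)


def parse_router_sticker(ocr_text: str) -> Optional[WifiCredentials]:
    """Return the SSID and password found in OCR text, or None if either is missing."""
    ssid = SSID_PATTERN.search(ocr_text)
    password = PASSWORD_PATTERN.search(ocr_text)
    if ssid is None or password is None:
        return None
    return WifiCredentials(ssid.group(1), password.group(1))


# Made-up sticker text standing in for the output of an OCR engine.
sticker_text = """Model: HomeRouter 100
SSID: CoffeeHouse_5G
Password: espresso-1234
Serial: 000000"""

credentials = parse_router_sticker(sticker_text)
print(credentials)
```

Actually joining the network would be handled by the phone’s operating system, which is outside the scope of this sketch.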

Not so long ago, Google had also acquired Word Lens, which detects and translates foreign-language text in a picture captured by your smartphone’s camera. Goggles was another app that could pull up information on a painting, barcode or landmark captured in the frame. Lens, however, will not be a standalone app; it will instead work within other Google apps to allow for functional versatility.

Google weaves AI and Big Data into the conventional Search feature

Google has fostered a mission of ‘AI first’ – rethinking all its products with a renewed focus on machine learning (ML) and artificial intelligence (AI). Pichai introduced Lens saying, “All of Google was built because we started understanding text and web pages. So the fact that computers can understand images and videos has profound implications for our core mission.” Google Lens is an attempt to revolutionise how this device-centric world looks at technology.

Google set out on this vision when it began establishing data centres across the world, putting a huge network of computers in place. With people using its search engine and email service, these machines were fed valuable pieces of personal information for around two decades. These AI-first data centres laid the foundation for building an app deep-rooted in Big Data and, eventually, a smart world. As per Pichai, the idea behind the product is to use Google’s computer vision and AI technology to make the smartphone camera smart.

The massive reserves of visual information and Google’s growing cloud AI infrastructure help Lens not only procure information but also understand the context, figure out the user’s location and predict the user’s next set of actions. Take it a notch higher, and Google’s VPS (Visual Positioning System) could build on Lens’ underlying technology in Tango devices to identify specific objects in the device’s field of vision, like objects on a store shelf.

Google Lens beyond smart software

AI and Big Data are not the only two tech buzzwords Lens relates to. While showing us ‘just another fancy camera app’, Google has also hinted at the future of smart sensing and data aggregation. What it has done with Lens is combine all general-purpose sensors into one ‘super-sensor’ – the smartphone camera. Sensors like a barcode reader or a retail-business identifier can now be replaced by a camera. For the Internet of Things (IoT), this could mark the beginning of a disruption of the “trillion sensor world” revolution.

The concept of a general-purpose sensor coupled with the unstoppable rise of the cloud AI infrastructure could usher us into a world where the data procured by super-sensors could be used for software-based virtual sensors. Google Lens is thus bringing the power of existing technologies like AI, machine learning and IoT deeper into our lives.

Google is not the only one trying to make your camera better

Google’s attempts to shift the world’s focus from being device-centric to AI-centric are only growing stronger by the day. This contributes to the higher success rate that Google can boast of compared with its peers. Samsung, with Bixby Vision on the Galaxy S8, has also ventured into a similar space by building a vision-based version of its digital assistant, Bixby. Earlier, in April, Facebook announced an augmented-reality (AR) platform for developers that lets them experiment with photo and video filters, games and art projects.

Pinterest also has a tool named ‘Lens’ that lets people point their cameras at real-world items and shows them where to buy those items or finds similar objects online. While we shall learn more about each of these scenario-changing tech events as they unfold, it is important to note that Google has provided us with another search engine, just with the camera as the input mode instead of a text box. Although user privacy will remain a concern, Google claims that Lens is secure and socially acceptable.