Artificial Intelligence (AI) has come a long way in recent years, transforming from a mere concept into a tangible reality. As the capabilities of AI continue to expand, so do the concerns surrounding privacy. In this article, we will delve into the intersection of AI and privacy, exploring the key components of AI, its impact on personal privacy, the legal perspective, and the role of ethics in AI development.

Understanding AI Issues: A Brief Overview

Before we can fully grasp the privacy issues associated with AI, it is essential to understand what AI entails. At its core, AI refers to the development of computer systems capable of performing tasks that typically require human intelligence. This includes processes like learning, problem-solving, decision-making, and even speech recognition. Exploring the broader landscape of AI issues adds another layer to our understanding of the challenges and considerations in this rapidly evolving field.

Artificial Intelligence has come a long way since its inception, and its evolution has been nothing short of remarkable. From its early conceptualization to its current reality, AI has transformed the way we live and interact with technology.

The Evolution of AI: From Concept to Reality

The concept of AI has been around for decades, but recent technological advancements have brought it to the forefront of our daily lives. The journey of AI began with the pioneering work of computer scientists who sought to create machines that could mimic human intelligence.

Early AI systems were limited in their capabilities and were primarily used for specific tasks. However, with the advent of more powerful computers and the development of sophisticated algorithms, AI started to make significant strides.

Today, AI has become an inseparable part of our modern world. From voice assistants like Siri and Alexa to self-driving cars and recommendation algorithms, AI has permeated various aspects of our lives, making them more convenient and efficient. With technology advancing at such a rapid pace, it is crucial to examine the key elements that make AI work. It’s also important to address AI issues to ensure the responsible and ethical development and deployment of these technologies.

What Is the Concept of Digital Privacy?

The idea of privacy has evolved immensely over the past 50 years.

In the pre-Internet age, it mainly referred to physical privacy, which was much easier to comprehend and control. People could protect their sensitive information, such as their Social Security or credit card numbers.

However, once the internet and numerous online services launched, cybercriminals gained new ways to get personal information into the wrong hands.

But hackers aren’t always responsible for stealing or misusing data. People use a number of different online tools, meaning that they go through a lot of fine print and simply accept terms of service without reading them, thus allowing companies to collect, store, and use their personal data.

With AI and advanced analytics, this problem only becomes more complex, as this technology opens the door to a number of serious and even dangerous consequences concerning privacy violations, manipulation, and accidents.

Some of the privacy issues related to the use of AI include:

- De-anonymization and re-identification. Together with facial recognition, AI can be used to identify and monitor people, even in data sets that were supposed to be anonymous.

- Discrimination. As a result of de-anonymization and re-identification, AI profiling, and automated decision-making, people can be discriminated against and judged negatively based on sensitive information available about them.

- The opacity of profiling. AI-profiling has become a reality, and the fact that even those who designed such systems can’t always fathom what processes they use makes it difficult to interpret the outcomes and understand whether they are correct or not. This opacity can, thus, have a significant effect on people’s lives.
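To make the re-identification risk from the list above more tangible, here is a minimal sketch of a so-called linkage attack. Everything in it is hypothetical: the records, the field names, and the `reidentify` helper are invented for illustration. It assumes an attacker holds an "anonymized" data set plus a public one that share a few quasi-identifiers (ZIP code, birth year, gender).

```python
# Hypothetical toy data, invented purely for illustration.
anonymized_health = [
    {"zip": "02139", "birth_year": 1985, "gender": "F", "diagnosis": "asthma"},
    {"zip": "02139", "birth_year": 1990, "gender": "M", "diagnosis": "diabetes"},
    {"zip": "94105", "birth_year": 1985, "gender": "F", "diagnosis": "migraine"},
]
public_register = [
    {"name": "Alice Example", "zip": "02139", "birth_year": 1985, "gender": "F"},
    {"name": "Bob Example", "zip": "02139", "birth_year": 1990, "gender": "M"},
    {"name": "Carol Example", "zip": "60601", "birth_year": 1972, "gender": "F"},
]

# Fields that are not names, but together can single a person out.
QUASI_IDENTIFIERS = ("zip", "birth_year", "gender")


def key(record):
    """Build a lookup key from the quasi-identifier fields."""
    return tuple(record[field] for field in QUASI_IDENTIFIERS)


def reidentify(anonymized, public):
    """Return (name, diagnosis) pairs where the key matches exactly one person."""
    names_by_key = {}
    for person in public:
        names_by_key.setdefault(key(person), []).append(person["name"])

    matches = []
    for row in anonymized:
        names = names_by_key.get(key(row), [])
        if len(names) == 1:  # a unique match means the row is re-identified
            matches.append((names[0], row["diagnosis"]))
    return matches


matches = reidentify(anonymized_health, public_register)
for name, diagnosis in matches:
    print(name, "->", diagnosis)
```

The point of the sketch is that removing names alone is not enough: the remaining fields can act as a fingerprint once a second data set is available.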

As the world is still reeling from the Facebook-Cambridge Analytica scandal, and from a stream of subsequent data breaches for which respectable companies were responsible, it’s only logical to think about how artificial intelligence, and its subsets such as machine learning and natural language processing, will overcome these challenges. Addressing and mitigating these concerns means contemplating the ethical, privacy, and security dimensions of developing and deploying AI technologies.

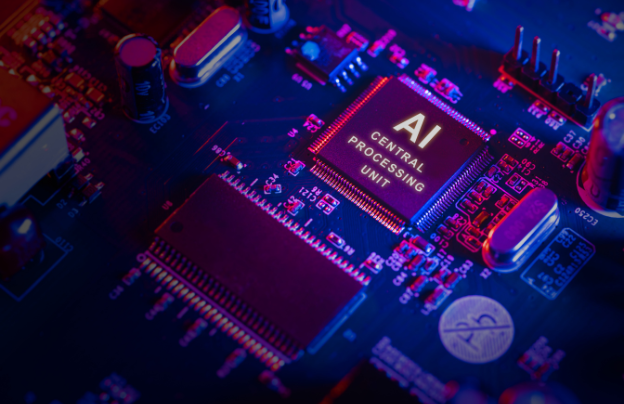

Key Components of Artificial Intelligence

AI systems are built on a variety of components, each playing a vital role in their functionality. These components include machine learning algorithms, natural language processing, computer vision, and neural networks. Let’s look at each of them in turn:

- Machine learning algorithms enable AI systems to learn and improve from experience without being explicitly programmed. By analyzing vast amounts of data, these algorithms can identify patterns and make predictions or decisions based on that information.

- Natural language processing allows machines to understand and interpret human language, enabling chatbots and voice assistants to respond intelligently. This technology has revolutionized the way we interact with computers, making it possible to have natural and meaningful conversations with machines.

- Computer vision enables machines to analyze and interpret visual information, facilitating facial recognition and object detection. This capability has found applications in various fields, including security, healthcare, and autonomous vehicles.

- Lastly, neural networks are modeled after the human brain, mimicking its ability to learn and recognize patterns. These networks consist of interconnected nodes, or “neurons,” that process and transmit information. Neural networks have proven to be highly effective in tasks such as image recognition, speech synthesis, and language translation.
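As a rough illustration of what "learning from experience without being explicitly programmed" means, the sketch below trains a single artificial neuron (a perceptron) to reproduce the logical AND function. The function names and the training data are assumptions made for this example; real systems use far larger networks and data sets, but the principle of adjusting weights after each mistake is the same.

```python
def predict(weights, bias, inputs):
    """Fire (return 1) when the weighted sum of the inputs is positive."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def train_perceptron(samples, epochs=20):
    """Classic perceptron rule with integer weights and a learning rate of 1."""
    weights = [0, 0]
    bias = 0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            # Nudge each weight in the direction that reduces the error.
            weights = [w + error * x for w, x in zip(weights, inputs)]
            bias += error
    return weights, bias


# Training examples: the rule "output 1 only if both inputs are 1"
# is never written into the code, only shown through examples.
and_samples = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train_perceptron(and_samples)
for inputs, target in and_samples:
    print(inputs, "->", predict(weights, bias, inputs))
```

Nothing in `train_perceptron` mentions AND. The behaviour comes entirely from the examples, which is also why the quality and origin of training data matter so much for privacy.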

As AI continues to advance, researchers and developers are constantly exploring new techniques and technologies to enhance its capabilities. From reinforcement learning to generative adversarial networks, the field of AI is a hotbed of innovation and discovery.

In conclusion, AI is a rapidly evolving field that has the potential to revolutionize various industries and improve our daily lives. Understanding the key components and the evolution of AI is crucial in navigating the complex landscape of this transformative technology. Additionally, staying informed about AI issues is essential for addressing the challenges and ethical considerations associated with its advancement.

The Intersection of AI and Privacy

As AI becomes increasingly prevalent in our lives, it intertwines with personal privacy in numerous ways. One significant concern stems from AI’s role in data collection.

AI systems heavily rely on data to function effectively. They gather vast amounts of information from various sources, including online platforms, IoT devices, and even surveillance cameras. This data collection allows AI systems to offer personalized experiences and tailored recommendations, improving our daily lives. For example, AI-powered virtual assistants can learn our preferences and habits to provide us with more relevant and useful information.

However, the extensive data collection by AI systems also raises privacy concerns. Individuals may feel uneasy knowing that their personal data is being collected, stored, and analyzed by AI systems. Questions arise regarding who has access to this data, how it is being used, and whether it is being adequately protected.

AI and personal privacy therefore have a complex relationship. On the one hand, data-hungry systems put personal information at risk. On the other hand, AI systems can enhance privacy by automating tasks and improving data security. For instance, AI algorithms can detect and help prevent cyber attacks, safeguarding our sensitive information. Additionally, AI can help anonymize data, removing personally identifiable information to protect individuals’ privacy.
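To show what removing personally identifiable information can look like in practice, here is a minimal sketch using only Python's standard library. It is an assumption-laden example: the field names, the `pseudonymize` helper, and the secret key are hypothetical. Strictly speaking it performs pseudonymization (replacing identifiers with a keyed token) rather than full anonymization, since whoever holds the key could still link tokens back to people.

```python
import hashlib
import hmac

# Hypothetical list of fields treated as direct identifiers.
DIRECT_IDENTIFIERS = {"name", "email", "phone"}


def pseudonymize(record, secret_key):
    """Drop direct identifiers and add a stable keyed token in their place."""
    token = hmac.new(
        secret_key, record["email"].encode("utf-8"), hashlib.sha256
    ).hexdigest()[:16]
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    cleaned["user_token"] = token
    return cleaned


secret = b"example-key-kept-outside-the-dataset"  # placeholder value
customer = {
    "name": "Alice Example",
    "email": "alice@example.com",
    "phone": "555-0100",
    "age": 34,
    "favourite_category": "books",
}

print(pseudonymize(customer, secret))
```

The same email always maps to the same token, so records can still be analyzed together, while the name, email, and phone number never reach the analytics system.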

In conclusion, the intersection of AI and privacy is a complex and evolving landscape. While AI systems have the potential to enhance our lives, it is crucial to address privacy concerns and ensure that individuals’ personal information is protected. By implementing robust regulations, fostering transparency, and exploring privacy-preserving technologies, we can navigate this intersection and harness the power of AI while safeguarding our privacy.

The Rising Concerns about AI and Privacy

While AI offers remarkable advancements and conveniences, it also raises legitimate concerns regarding individual privacy.

AI’s Impact on Individual Privacy

AI’s ability to analyze vast amounts of data can lead to the creation of detailed profiles, with individuals then being subjected to targeted advertising and messaging. This personalized approach, while showcasing the capabilities of AI, may infringe upon an individual’s right to privacy by intruding into their personal lives and influencing their decisions.

Furthermore, data breaches and leaks in AI systems pose significant threats to personal privacy. If sensitive information falls into the wrong hands, individuals may find themselves susceptible to fraud, identity theft, and other malicious activities.

The AI Issue: Data Problem

Brands and marketing agencies have always relied on having detailed data about their audiences in order to be able to create relatable and engaging campaigns. This allowed them to target their prospects with the right marketing message as well as predict their purchasing behaviour.

Back in the day, it wasn’t that easy to collect, process, and interpret huge volumes of customer data. But, as the world started to go digital, various data collecting tools and tactics appeared, which facilitated the process and offered marketers an opportunity to gain valuable insights into their audience’s preferences, needs, and problems. Alongside these advancements, questions arose about privacy, bias, and the ethical use of sensitive customer data. In addition, as all these bits and pieces of information were scooped from different sources, they were highly unstructured, meaning that it was nearly impossible to make sense of them.

That changed when artificial intelligence and big data analytics appeared. From that point on, one of the core roles of AI has been to analyze and interpret both structured and unstructured data. This technological advancement has significantly enhanced our ability to extract valuable insights from the vast sea of information. And, as is usually the case with data, the issue of privacy appears.

The Debate: AI Advancements vs Privacy Rights

The advancement of AI technology raises an important question: how can we strike a balance between embracing AI’s potential while safeguarding privacy rights?

On one side of the debate, supporters argue that AI can enhance security measures, automate processes, and improve our lives in many ways. They believe that the benefits provided by AI outweigh the privacy concerns it raises.

Others, however, express concerns about the potential for misuse and intrusive surveillance. They emphasize the need for stringent regulations and safeguards to prevent AI from encroaching upon personal privacy.

The Legal Perspective: AI and Privacy Laws

The legal landscape surrounding AI and privacy rights is complex and ever-evolving. Existing legislation often struggles to keep up with the rapid pace of technological advancements.

Current Legal Framework for AI Privacy

Currently, various privacy laws offer some protection against AI-related privacy infringements. The General Data Protection Regulation (GDPR) in the European Union and the California Consumer Privacy Act (CCPA) in the United States are two notable examples.

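These laws require organizations to tell people what personal data they collect and why, give individuals control over their data (under the GDPR, often by asking for informed consent; under the CCPA, by letting consumers opt out of the sale of their data), and establish mechanisms for data deletion.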

The GDPR

This set of regulations is an important step towards improving privacy in the world of AI, but there’s a catch. Under its purpose limitation principle, companies may collect personal data only for specified, explicit, and legitimate purposes, so they need to know what they will use the data for before they collect it.

Many companies find that difficult because they can’t always predict the value of data in advance. This means that the GDPR can hinder their capacity to innovate.

Federated Learning

This decentralised AI framework, which is distributed across millions of devices, enables scientists to create, train, improve and assess a model based on a certain number of local models.

The key factor is that, in this approach, companies never get access to users’ raw data, nor the option to label it. The data stays on each device and only model updates are shared, so the technique protects users’ privacy while still offering the benefits of aggregated model improvement.
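The idea of training on local models and combining them can be sketched in a few lines. The example below is a simplified take on federated averaging, under strong assumptions: the model is a single number `w` in `y = w * x`, the three "devices" and their data are invented, and `local_update` and `federated_round` are hypothetical helper names. Production systems add secure aggregation, client sampling, and much more.

```python
def local_update(w, data, lr=0.01, steps=50):
    """Train on one device's private data; the raw data never leaves here."""
    for _ in range(steps):
        # Gradient of the mean squared error for the model y = w * x.
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_round(global_w, clients):
    """Server step: average the returned weights, weighted by data size."""
    total = sum(len(data) for data in clients)
    return sum(local_update(global_w, data) * len(data) for data in clients) / total


# Hypothetical private data sets, one per device, all following y = 3 * x.
clients = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(3.0, 9.0)],
    [(0.5, 1.5), (4.0, 12.0), (2.5, 7.5)],
]

w = 0.0
for _ in range(10):
    w = federated_round(w, clients)
print("learned weight:", round(w, 4))
```

Note that `federated_round` only ever sees the numbers returned by `local_update`, never the `(x, y)` pairs themselves, which is the privacy argument the approach rests on.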

Differential Privacy

Many applications, such as maps, collect individual users’ data so that they can make traffic predictions and offer the fastest route recommendations. At the moment, it’s theoretically possible to identify individual contributions to these aggregated data sets, which means that it’s also possible to expose the identity of the people who made them. This raises significant privacy and data security concerns.

Differential privacy adds carefully calibrated random noise to the data or to the results computed from it. The aggregate statistics stay useful, but it becomes practically impossible to trace the information back and identify individual contributors. This approach is particularly valuable for AI privacy, as it limits how much a trained model or a published statistic can reveal about any single person.
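One common way to add such randomness is the Laplace mechanism, sketched below for a simple counting query. This is an illustrative example, not a production implementation: the `private_count` helper, the sample ages, and the chosen `epsilon` are assumptions. A count changes by at most 1 when one person is added or removed, so noise with scale `1 / epsilon` is used.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(values, predicate, epsilon, rng):
    """Return a noisy count; smaller epsilon means more noise and more privacy."""
    true_count = sum(1 for value in values if predicate(value))
    sensitivity = 1.0  # one person changes a count by at most 1
    return true_count + laplace_noise(sensitivity / epsilon, rng)


rng = random.Random()  # seed it only for reproducible demos
ages = [23, 35, 41, 29, 52, 67, 38]  # hypothetical user data

noisy = private_count(ages, lambda age: age >= 40, epsilon=1.0, rng=rng)
print("noisy number of users aged 40+:", round(noisy, 2))
```

Each individual answer is slightly off, yet averaged over many users or queries the statistics remain accurate enough for tasks like traffic prediction.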

Homomorphic Encryption

This approach lets algorithms, including machine learning algorithms, run computations directly on encrypted data, so the sensitive information itself stays inaccessible to whoever does the processing.

In a nutshell, the data is encrypted before it leaves its owner, a remote system analyzes it in encrypted form, and the results are sent back encrypted too. Only the holder of the secret key can decipher them. The entire process can therefore be carried out while protecting the privacy of the users whose sensitive information is being analyzed.
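To illustrate computing on encrypted data, here is a toy version of the Paillier cryptosystem, which is additively homomorphic: multiplying two ciphertexts yields an encryption of the sum of the plaintexts. This is a sketch for intuition only. The tiny primes are hopelessly insecure, the function names are chosen for this example, and real deployments should rely on a vetted cryptographic library. It needs Python 3.8 or newer for the modular inverse via `pow`.

```python
import math
import random


def generate_keys(p=61, q=53):
    """Return (public n, private (lam, mu)) from two small demo primes."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p-1, q-1)
    mu = pow(lam, -1, n)  # modular inverse; valid because g = n + 1 is used
    return n, (lam, mu)


def encrypt(n, message, rng=random):
    """Encrypt an integer 0 <= message < n with fresh randomness r."""
    n_sq = n * n
    while True:
        r = rng.randrange(1, n)
        if math.gcd(r, n) == 1:
            break
    return (pow(n + 1, message, n_sq) * pow(r, n, n_sq)) % n_sq


def add_encrypted(n, c1, c2):
    """Add two hidden values by multiplying their ciphertexts."""
    return (c1 * c2) % (n * n)


def decrypt(n, private_key, ciphertext):
    lam, mu = private_key
    l_value = (pow(ciphertext, lam, n * n) - 1) // n
    return (l_value * mu) % n


n, private_key = generate_keys()
salary_a = encrypt(n, 1200)  # encrypted on the data owner's side
salary_b = encrypt(n, 950)
encrypted_total = add_encrypted(n, salary_a, salary_b)  # done remotely, blind
print("decrypted total:", decrypt(n, private_key, encrypted_total))
```

The remote party that calls `add_encrypted` only ever handles ciphertexts and the public value `n`. Only the holder of `private_key` can read the result.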

It’s clear that researchers are working on the best way to make the most of artificial intelligence without exposing and compromising sensitive information. In the future, we can expect this entire process to become much more sophisticated.

Michael has been working in marketing for almost a decade and has worked with a huge range of clients, which has made him knowledgeable on many different subjects. He has recently rediscovered a passion for writing and hopes to make it a daily habit. You can read more of Michael’s work at Qeedle.

The Need for New Legislation in AI Privacy

While existing legislation provides a foundation, many argue that new laws explicitly addressing AI privacy are necessary. As AI technology evolves, it becomes increasingly important to establish clear boundaries and enforce accountability.

Furthermore, comprehensive legislation would help ensure that AI development adheres to ethical standards and respects individual privacy rights.

The Role of Ethics in AI and Privacy

When it comes to AI and privacy, ethical considerations play a crucial role in guiding development and usage practices.

Ethical Considerations in AI Development

Developers and organizations have a responsibility to embed ethical considerations into the development process of AI systems. This includes ensuring transparency, fairness, accountability, and the protection of privacy rights.

By adopting ethical frameworks to address AI issues, organizations can mitigate potential risks, demonstrate responsible AI practices, and cultivate trust among users.

Is There a Way to Overcome AI Issues?

We can’t deny that artificial intelligence is something that improves our lives and makes things easier. However, this tremendous potential has to be properly harnessed in order not to wreak havoc in the arena of privacy.

But it’s worth the effort, as AI powers numerous other tech advancements that can lead to seismic shifts in the way we do things. Take the Internet of Things, for example, a huge interconnected web of devices and services that allows people to control, among many other things, their cars, appliances, and homes remotely.

It’s obvious that such a colossal network generates vast volumes of data that can be compromised. Privacy and ethics therefore become crucial considerations in the development and deployment of these technologies.

Similarly, AI-powered chatbots have become indispensable in numerous industries due to their ability to improve customer engagement, handle multiple customer queries at the same time, as well as collect, analyze, and store customer information so that it can be used for personalizing future interactions. Thanks to them, companies can reduce operational costs, allow for great customer experience, create the right marketing messages, and streamline call centre tasks, thus increasing customer retention rates.

How can we use all these advantages of AI without sacrificing privacy?

Scientists are trying to find a way of combining cryptography and machine learning so that such data can be used without anyone actually seeing it. Such a system would protect end users on the one hand, and on the other allow companies to leverage their data without breaking the code of ethics. In other words, it strives to strike a balance between data utility and user confidentiality.

Balancing AI Innovation with Ethical Responsibility

Striking a balance between AI innovation and ethical responsibility is crucial for achieving a future where AI and privacy coexist harmoniously. It demands collaboration between researchers, developers, policymakers, and individuals to navigate the complexities and ensure that AI is used in a manner that respects privacy rights.

In conclusion, as AI continues to evolve and become an integral part of our lives, it is essential to address the growing concerns surrounding privacy. By understanding the key components of AI, its impact on personal privacy, the legal perspective, and the role of ethics, we can work towards a future where the benefits of AI are harnessed responsibly while privacy rights are safeguarded.