The cost of consumer technology tends to fall as time passes. Ever wondered why? Sure, more demand funds better R&D, which leads to lower prices. But that explanation is incomplete without something else: Moore’s law.

Before we start, a quick refresher in case you’re hearing about this for the first time. Like many laws, this one is named after the man who postulated it, Gordon E. Moore. Moore’s law doesn’t deal with high-end particle physics. It involves something incredibly ordinary, something we depend on to a significant extent for the life we live today.

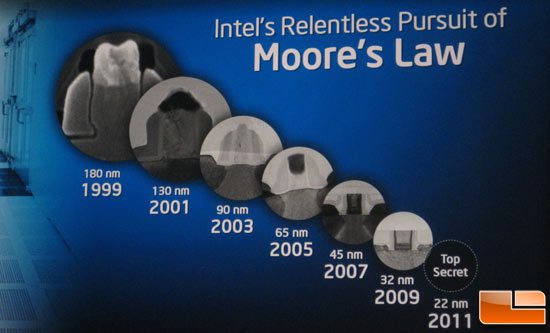

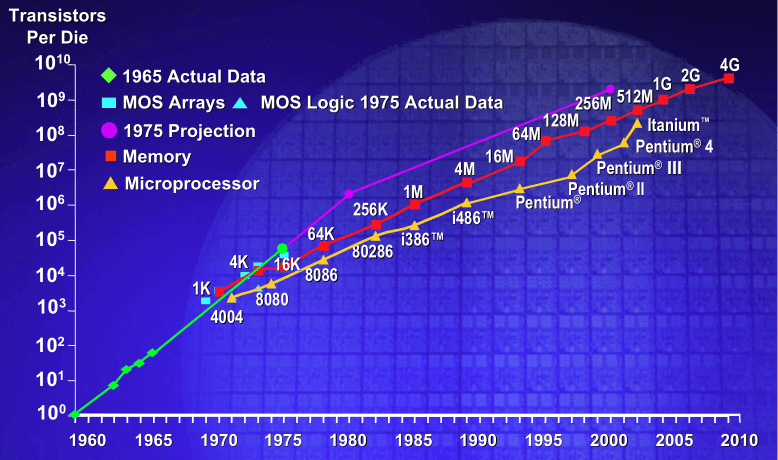

Moore’s law is basically an observation about transistors and integrated chips. Transistors are semiconductor devices that control the flow of electrons. When these breakthroughs first arrived several decades ago, transistors were huge, about 1.3 centimetres long. Soon a race began to shrink transistors while, at the same time, making chips all the more powerful. Miraculously, we have actually managed both. In fact, we’ve done it many times over.

Moore’s law states that the number of transistors on an integrated chip doubles every year. That held for the first few years after the prediction. Later the pace settled at a doubling every eighteen months, and then every twenty-four. The amazing thing about this law is that, although it was merely a prediction, it has stood the test of time. Even better, it is predicted to hold up for the next few decades. The law has compelled innovation to keep pace with it, as it has for the past fifty years.
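To get a feel for how much the doubling period matters, here is a minimal Python sketch of the arithmetic. It assumes a clean exponential, count = start × 2^(years / doubling period), which is a simplification of the real trend. The function name `projected_transistors` and the starting figure of 1,000 transistors are made up for illustration and are not taken from any real chip.

```python
def projected_transistors(initial_count, years_elapsed, doubling_period_years):
    """Project a transistor count, assuming exactly one doubling per period."""
    doublings = years_elapsed / doubling_period_years
    return initial_count * 2 ** doublings


# Hypothetical starting point: a chip with 1,000 transistors.
start = 1_000

# The three doubling periods mentioned above: 12, 18 and 24 months.
for period in (1.0, 1.5, 2.0):
    count = projected_transistors(start, 10, period)
    print(f"Doubling every {period} years: about {count:,.0f} transistors after 10 years")
```

Over ten years, doubling every year multiplies the count by 2^10 = 1,024, while doubling every two years multiplies it by only 2^5 = 32. A small change in the doubling period makes a very large difference over a decade.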

But as speculation (and our understanding of physics) goes, this exponential growth is bound to hit a dead end. A point will come where it is no longer possible to shrink a transistor (once it reaches atomic scales), or to layer transistors and pack them more efficiently on a silicon wafer. What happens then?

This limiting point is sufficiently far into the future. Who knows – we may find another way around the issues that may crop up by then.

Moore also formulated a second law, stating that as the trend towards denser, more compact integrated chips goes on, the initial capital required to develop these technologies also increases.

Designing these chips falls to microarchitects, technocrats and nanotechnologists, who still baffle us with their incredible speed of innovation. On the flip side, as transistors are shrunk, layered and so on, the complications and cost of innovation increase too. As a tribute to the company Moore co-founded, let’s get down to a few numbers. An Intel Core i7 microprocessor has 731 million transistors, and Intel’s Xeon processors have 1.9 billion. This has brought the scale down from centimeters to nanometers, from classical mechanics to quantum mechanics.

Now let us get down to ground level. How is this going to affect us? It figures prominently in the argument over how computing itself is expected to change. We have entered the realm of quantum computing. To give you the numbers: when the first integrated chip was made, holding a single transistor, it was about 11 to 13 millimeters long. Now a single square millimeter of an integrated chip holds 90 million transistors.

What does a transistor do anyway?

Everything. Transistors are the reason the processing speed of our devices has grown manifold. As for size, it’s obvious, isn’t it? Just compare the PC you had 10 years ago with the one you have now. Isn’t it much smaller, lighter and more efficient? We owe this to those working, or rather competing, to keep making processors smaller and lighter. Simply put, more transistors = more speed.

What will Moore’s law enable for us in the future? Artificial superintelligence? What will artificial intelligence do? Could AI itself find a way around Moore’s law, and shoot us to seemingly infinite computing capabilities? While there’s a lot of speculation (this WaitButWhy article is a great read), all we can do is wait with bated breath.