Quantum computing isn’t a practical reality yet, but it merits serious discussion nevertheless. Why? Because it has the potential to change how our computing systems work today. What’s holding quantum computing back from going mainstream, though?

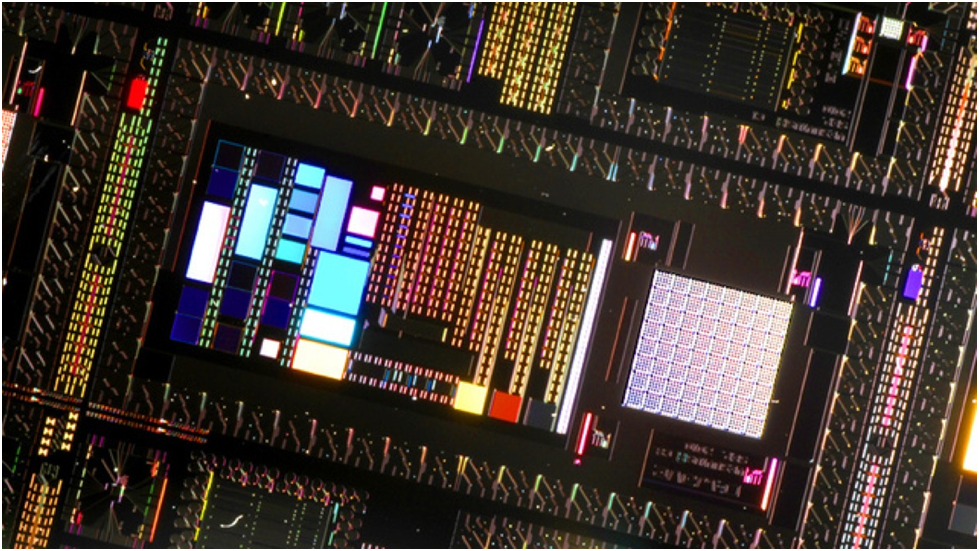

According to Moore’s law, the number of transistors on an integrated circuit doubles roughly every two years. But transistors cannot keep shrinking forever. When a transistor is only a few nanometres wide, electrons begin to tunnel through barriers that are supposed to block them, and the resulting leakage current and heat make the chip unreliable, leading to signal-processing errors.

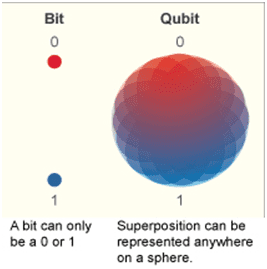

Quantum computing is one of the most promising ways around this limit. In a classical computer, the basic unit of memory is the bit; in a quantum computer, it is the quantum bit, or qubit. A bit always holds exactly one of the two binary values, 0 or 1. A qubit can be 0, 1, or a combination of both at once, thanks to a property called superposition. When a qubit is measured, however, the result is always a plain 0 or 1. Sound confusing? Remind yourself that we are now dealing with quantum mechanics rather than classical mechanics, and quantum mechanics is very counter-intuitive.
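
The idea can be sketched with a toy classical simulation. This is a minimal sketch, assuming a qubit is represented by two complex amplitudes for the outcomes 0 and 1; the helper names (`equal_superposition`, `probabilities`, `measure`) are hypothetical, and the sampling uses Python’s pseudorandom generator, so it only imitates what a real measurement does.

```python
import math
import random

# Toy model (an assumption for illustration): a qubit is a pair of complex
# amplitudes (alpha, beta) for the outcomes 0 and 1.

def equal_superposition():
    """Amplitudes of the state (|0> + |1>) / sqrt(2)."""
    amp = 1 / math.sqrt(2)
    return (complex(amp, 0), complex(amp, 0))

def probabilities(state):
    """The probability of each outcome is the squared magnitude of its amplitude."""
    alpha, beta = state
    return (abs(alpha) ** 2, abs(beta) ** 2)

def measure(state, rng):
    """Simulated measurement: always returns a plain 0 or 1, never 'both'."""
    p0, _ = probabilities(state)
    return 0 if rng.random() < p0 else 1

rng = random.Random(0)
plus = equal_superposition()
counts = [0, 0]
for _ in range(1000):
    counts[measure(plus, rng)] += 1
print(probabilities(plus))  # both values are 0.5 up to floating-point rounding
print(counts)               # roughly half 0s and half 1s
```

Each simulated measurement yields a single bit; the superposition only shows up in the statistics over many runs.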

This power comes at a cost for anyone trying to describe a quantum system with classical resources. To write down the full state of an n-qubit system, a classical computer must track 2^n complex numbers (amplitudes), one for every possible n-bit string, so the description doubles in size with each added qubit. A measurement does not reveal those amplitudes directly: it returns a single bit string, chosen with probabilities set by the superposition, and that superposition depends on the initial state of the qubits (for example, whether each one starts in its ground or excited state) and on the operations applied to them. Hence the initial states of the qubits have to be taken into account, because a different initial state gives a different result.
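
The 2^n growth is easy to see if the amplitudes are stored in a plain list. The sketch below is a toy under stated assumptions: it builds a register from independent single-qubit states using a tensor (Kronecker) product, and the helper names `kron` and `register` are hypothetical. Entangled states cannot be built this way, but they still need the same 2^n amplitudes.

```python
import math

def kron(a, b):
    """Tensor (Kronecker) product of two state vectors stored as lists."""
    return [x * y for x in a for y in b]

def register(qubits):
    """Combine independent single-qubit states into one n-qubit state vector."""
    state = [complex(1, 0)]
    for q in qubits:
        state = kron(state, q)
    return state

zero = [complex(1, 0), complex(0, 0)]          # the basis state |0>
plus = [complex(1 / math.sqrt(2), 0)] * 2      # (|0> + |1>) / sqrt(2)

for n in range(1, 6):
    print(n, "qubits ->", len(register([plus] * n)), "amplitudes")

# The initial state matters: starting from all zeros, only the bit string 000
# has nonzero probability, while |+>|+>|+> spreads evenly over all 8 strings.
print([abs(a) ** 2 for a in register([zero] * 3)])
print([round(abs(a) ** 2, 3) for a in register([plus] * 3)])
```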

In a deterministic computer, the outcome is fixed: the chosen value has probability 1. In a probabilistic computer, the outcome can be any value from a given set, each with a probability between 0 and 1, and those probabilities add up to 1. In a quantum computer, each possible value is instead assigned an amplitude, which is a complex number. The probability of observing a value is the squared magnitude of its amplitude, and these squared magnitudes add up to 1. This is one of the major differences between classical and quantum computers.
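
In symbols, the three cases can be summarized as follows, writing $p_x$ for the probability of outcome $x$ and $\alpha_x$ for the complex amplitude attached to it (notation chosen here for illustration):

$$
\begin{aligned}
\text{deterministic:}\quad & p_x \in \{0, 1\}, && \textstyle\sum_x p_x = 1 \\
\text{probabilistic:}\quad & 0 \le p_x \le 1, && \textstyle\sum_x p_x = 1 \\
\text{quantum:}\quad & |\psi\rangle = \textstyle\sum_x \alpha_x |x\rangle,\ \alpha_x \in \mathbb{C}, && \textstyle\sum_x |\alpha_x|^2 = 1,\quad p_x = |\alpha_x|^2
\end{aligned}
$$

As a worked example, a single qubit with $\alpha_0 = \tfrac{1+i}{2}$ and $\alpha_1 = -\tfrac{1}{\sqrt{2}}$ has $p_0 = \tfrac{1^2 + 1^2}{4} = \tfrac{1}{2}$ and $p_1 = \tfrac{1}{2}$, which sum to 1. The complex phase and the minus sign do not change these probabilities, but they do matter once amplitudes are combined and interfere.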

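Because quantum objects such as electrons behave both as particles and as waves, the mathematics involved becomes more complex. Like waves, amplitudes superpose and interfere, reinforcing or cancelling one another. In addition, two or more qubits can become entangled, so that their joint state can no longer be described one qubit at a time.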
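
Both effects show up in a few lines of arithmetic. This is a minimal sketch using a toy state-vector representation (plain Python lists of amplitudes, an assumption for illustration): applying the standard Hadamard gate twice makes the two contributions to outcome 1 cancel, and a simple determinant test shows that the Bell state (|00> + |11>)/√2 cannot be split into two separate single-qubit states. The function names are hypothetical.

```python
import math

H = 1 / math.sqrt(2)

def hadamard(state):
    """Apply the Hadamard gate to a single-qubit state [a0, a1]."""
    a0, a1 = state
    return [H * (a0 + a1), H * (a0 - a1)]

def probs(state):
    """Squared magnitudes of the amplitudes."""
    return [abs(a) ** 2 for a in state]

zero = [1.0, 0.0]
once = hadamard(zero)    # equal superposition of 0 and 1
twice = hadamard(once)   # the two paths to outcome 1 cancel out
print([round(p, 6) for p in probs(once)])   # [0.5, 0.5]
print([round(p, 6) for p in probs(twice)])  # [1.0, 0.0]: destructive interference

# Two-qubit amplitudes ordered as |00>, |01>, |10>, |11>.
bell = [H, 0.0, 0.0, H]          # (|00> + |11>) / sqrt(2)
product = [0.5, 0.5, 0.5, 0.5]   # |+> on each qubit, not entangled

def is_product_state(s, tol=1e-12):
    """A two-qubit state [a, b, c, d] factors into two single-qubit states
    exactly when a*d - b*c == 0."""
    a, b, c, d = s
    return abs(a * d - b * c) < tol

print(is_product_state(bell))     # False: entangled
print(is_product_state(product))  # True
```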

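A classical computer achieves parallelism by linking several processors together. A quantum processor works differently: a single register of qubits can hold a superposition of many inputs at once, and a well-designed quantum algorithm uses interference to make the useful answers likely when the register is measured. At around 50 qubits, the full quantum state becomes too large for today’s supercomputers to store and simulate directly, which is why that figure is often cited as the point where quantum machines could overtake them on certain tasks.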
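
A back-of-the-envelope calculation shows why 50 qubits is a meaningful threshold. This sketch assumes a brute-force simulator that stores every amplitude as a 16-byte double-precision complex number; cleverer simulation techniques can do better for some circuits, so treat the numbers as rough. The function names are hypothetical.

```python
BYTES_PER_AMPLITUDE = 16  # assumption: one complex128 value per amplitude

def state_vector_bytes(n_qubits):
    """Memory for a full n-qubit state vector: 2**n amplitudes."""
    return (2 ** n_qubits) * BYTES_PER_AMPLITUDE

def human_readable(num_bytes):
    """Format a byte count using binary (1024-based) units."""
    for unit in ("B", "KiB", "MiB", "GiB", "TiB", "PiB", "EiB"):
        if num_bytes < 1024 or unit == "EiB":
            return f"{num_bytes:g} {unit}"
        num_bytes /= 1024

for n in (10, 30, 50):
    print(f"{n} qubits: {human_readable(state_vector_bytes(n))}")
# 10 qubits: 16 KiB
# 30 qubits: 16 GiB
# 50 qubits: 16 PiB
```

Each extra qubit doubles the memory, so going from 30 to 50 qubits multiplies it by about a million, taking it to a scale comparable to or larger than the total memory of the biggest supercomputers.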

A quantum algorithm called Shor’s algorithm solves integer factorization in polynomial time. In principle, a large, error-corrected quantum computer running it could factor numbers of 300 digits or more quickly, whereas a classical computer using the best known algorithms would take an impractically long time, by some estimates billions of years.
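
Shor’s algorithm reduces factoring to finding the order (period) of a number modulo N; only that order-finding step needs a quantum computer. The sketch below shows the classical reduction around it, with one loudly stated substitution: `find_order` is a brute-force classical loop standing in for the quantum period-finding subroutine, so it takes exponential time and only works for tiny inputs. The function names are hypothetical.

```python
import math
import random

def find_order(a, n):
    """Smallest r > 0 with a**r % n == 1 (requires gcd(a, n) == 1).
    Brute force here; this is the step Shor's algorithm does efficiently
    on a quantum computer."""
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def shor_classical_sketch(n, rng=None):
    """Factor an odd composite n that is not a prime power, using the
    order-finding reduction from Shor's algorithm."""
    if rng is None:
        rng = random.Random(1)
    while True:
        a = rng.randrange(2, n - 1)
        g = math.gcd(a, n)
        if g > 1:
            return g, n // g      # lucky guess already shares a factor with n
        r = find_order(a, n)
        if r % 2 == 1:
            continue              # need an even order; try another a
        y = pow(a, r // 2, n)
        if y == n - 1:
            continue              # a**(r/2) == -1 mod n yields only trivial factors
        p = math.gcd(y - 1, n)    # y*y == 1 but y != +-1 mod n, so p is nontrivial
        return p, n // p

print(shor_classical_sketch(15))
```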

Challenges

One of the major issues we face with quantum computers is that every single qubit must be isolated from its environment. Otherwise we end up with what is called decoherence: stray interactions with heat, light, or electromagnetic noise destroy the delicate superposition. And when one qubit interacts with the environment, it isn’t just that qubit that is affected. Quantum mechanics is beautiful but unforgiving: because the qubits in a computation are entangled with one another, the disturbance spreads and the quantum state of the entire system can collapse. This decoherence problem remains one of the most significant challenges in quantum computing today.
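
How decoherence worsens as systems grow can be illustrated with a deliberately simple model. This sketch assumes pure dephasing only, acting independently on each qubit with the same coherence time, and uses a hypothetical value of 100 microseconds for that time. Under those assumptions, the coherence of an n-qubit entangled GHZ state (|0...0> + |1...1>)/√2 decays n times faster than that of a single qubit.

```python
import math

T2 = 100e-6  # hypothetical dephasing (coherence) time: 100 microseconds

def relative_coherence(t, t2, n_qubits=1):
    """Fraction of the initial coherence left after time t for an n-qubit
    GHZ state under independent pure dephasing (toy model): exp(-n t / t2)."""
    return math.exp(-n_qubits * t / t2)

elapsed = 50e-6  # 50 microseconds
for n in (1, 10, 50):
    print(f"{n:>2} qubits: {relative_coherence(elapsed, T2, n):.2e} of coherence left")
```

Real devices also suffer energy relaxation and correlated noise, so this model understates the problem rather than capturing it fully.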

Another issue is that the error-correction techniques used in classical computers do not carry over to quantum computers. Today’s computers rely on error-correction mechanisms, typically redundant copies or check bits, to make sure data remains intact when it is stored or transmitted. Quantum computers cannot work the same way: an unknown qubit state cannot be copied (the no-cloning theorem), and measuring a qubit to check it destroys its superposition. Add the fact that quantum errors can be small continuous shifts rather than clean bit flips, and error correction becomes a lot tougher.
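
Quantum error correction works around this by spreading a qubit’s state across several entangled qubits instead of copying it, and by checking only parities between qubits rather than measuring the data itself. The simplest example is the three-qubit bit-flip code. This is a minimal toy sketch, assuming a plain state-vector simulation with hypothetical helper names; it corrects only a single bit-flip error, whereas practical schemes such as surface codes must also handle phase errors and are far more elaborate.

```python
# Toy state-vector model (an assumption for illustration): 3 qubits -> 8 amplitudes,
# indexed by the bit string q0 q1 q2 with q0 as the most significant bit.

def encode(alpha, beta):
    """Spread alpha|0> + beta|1> over three qubits as alpha|000> + beta|111>.
    This entangles the qubits; it does not copy the unknown state."""
    state = [0j] * 8
    state[0b000] = alpha
    state[0b111] = beta
    return state

def flip(state, qubit):
    """Apply a bit-flip (X) error to one qubit: amplitudes swap between
    bit strings that differ only in that qubit's position."""
    mask = 1 << (2 - qubit)
    return [state[i ^ mask] for i in range(8)]

def syndrome(state):
    """Parities (q0 xor q1, q1 xor q2). Every bit string in the superposition
    gives the same parities, so the check reveals nothing about alpha or beta.
    (Hardware measures these with extra ancilla qubits; here we simply read
    them off the simulated state.)"""
    for i, amp in enumerate(state):
        if abs(amp) > 1e-12:
            q0, q1, q2 = (i >> 2) & 1, (i >> 1) & 1, i & 1
            return (q0 ^ q1, q1 ^ q2)
    raise ValueError("state has no nonzero amplitude")

def correct(state):
    """Undo a single bit flip, located from the syndrome."""
    culprit = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}[syndrome(state)]
    return state if culprit is None else flip(state, culprit)

alpha, beta = 0.6, 0.8j               # any amplitudes with |alpha|^2 + |beta|^2 = 1
noisy = flip(encode(alpha, beta), 1)  # an error hits the middle qubit
print(syndrome(noisy))                # (1, 1) points at qubit 1
print(correct(noisy) == encode(alpha, beta))  # True
```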

Despite these challenges, the potential applications of quantum computers are numerous, and we have not yet mapped out the full spectrum of their advantages.

The Road Ahead

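Quantum computing is staggeringly exciting, and the range of its potential applications is enough to make any geek salivate. Take randomness. Classical software usually relies on pseudorandom number generators, which are deterministic algorithms: given the same starting seed, they produce exactly the same sequence, so there is always a pattern underneath. In quantum computers, true randomness can be achieved, because the outcome of measuring a qubit in superposition is fundamentally unpredictable.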
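
The pattern behind classical pseudorandom numbers comes from their determinism, which is easy to demonstrate. This sketch uses Python’s standard `random` module (chosen here only for illustration), which is based on the Mersenne Twister algorithm: two generators seeded with the same value produce identical sequences.

```python
import random

# Two independent generators, seeded identically.
first = random.Random(42)
second = random.Random(42)

seq1 = [first.randint(0, 9) for _ in range(10)]
seq2 = [second.randint(0, 9) for _ in range(10)]

# Same seed, same "random" digits: the output is fully determined by the seed.
print(seq1 == seq2)  # True
```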

The most significant advantage of a quantum computer, of course, will be raw performance. For certain problems, quantum computers could find answers that would take today’s computers longer than the age of the universe. Pharmaceutical researchers and molecular biologists, for example, could use quantum computers to simulate the quantum effects behind molecular interactions in the human body, potentially in real time.

Quantum computers operate at the atomic or subatomic level, whereas transistors in typical chips today have feature sizes of around 28 nanometres. There is plenty of potential to develop smaller, lighter and much more efficient systems.

At the time of writing, a 12-qubit system is the largest that has been achieved. Google is getting into the game and backing further development. While it’s hard to predict even a rough timeframe for this technology going mainstream, it’s nice to know we could be using it within our lifetimes.
